AI can keep offensive, toxic, or inappropriate comments out of your application using the TensorFlow.js Toxicity classifier model in JavaScript, with no need for humans to review every single word.

Keeping your website or application clean of inappropriate language is your responsibility, and it’s a heavy task. Thankfully, TensorFlow.js makes it easy to run AI models anywhere in your JavaScript code, on the frontend or the backend.


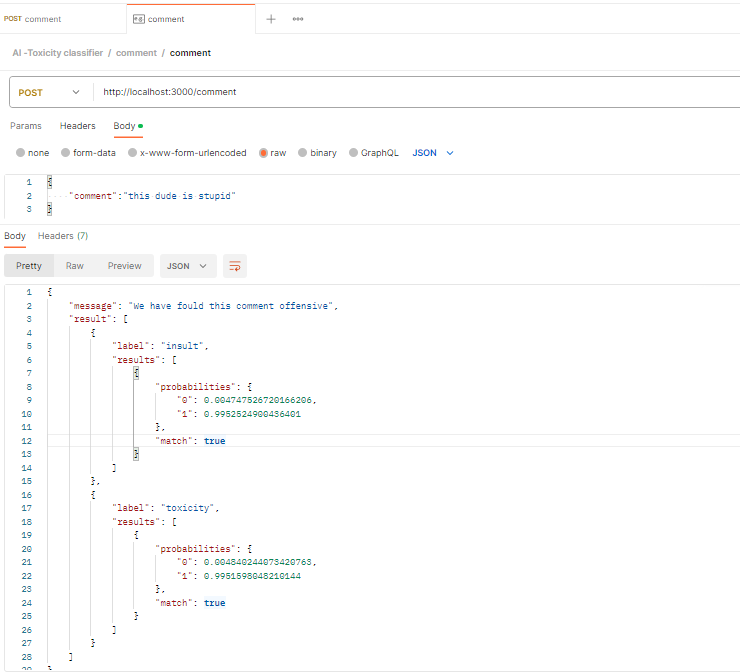

In this tutorial, we will run the AI model in a Node.js and Express.js backend. This gives us real-world experience using AI to filter comments sent from the frontend through a RESTful API.

Node.js Application

Let’s create a new Express application. First, create an empty directory for the project, navigate into it, and run the application generator tool, express-generator, to quickly create an application skeleton:

```
npx express-generator
```

Then install the packages:

```
npm install
```

The Express documentation lists several ways to start the server, depending on your operating system (`myapp` is the project name used there; replace it with your own). On macOS or Linux, run the app with this command:

```
$ DEBUG=myapp:* npm start
```

On Windows Command Prompt, use this command:

```
> set DEBUG=myapp:* & npm start
```

On Windows PowerShell, use this command:

```
PS> $env:DEBUG='myapp:*'; npm start
```

Let’s create a route in routes\index.js to handle the comment that we will later classify with the TensorFlow.js toxicity classifier.

You can test the API route by sending a POST request to http://localhost:3000/comment to make sure everything is OK.

```javascript
var express = require('express');
var router = express.Router();

/* GET home page. */
router.get('/', function(req, res, next) {
  res.render('index', { title: 'Express' });
});

/* handling the comments */
router.post('/comment', function(req, res, next) {
  res.status(201).json(req.body.comment);
});

module.exports = router;
```
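Because /comment is a POST route, opening it in a browser (which sends a GET request) won’t exercise it. Here is a minimal client sketch, assuming Node.js 18+ for the global `fetch` and the server running on port 3000. `postComment` and its `fetchImpl` option are hypothetical names; the option exists only so the request can be faked in tests.

```javascript
// Minimal client sketch for testing the /comment route.
// Assumes Node.js 18+ (global fetch) and the server listening on port 3000.
async function postComment(comment, {
  baseUrl = 'http://localhost:3000',
  fetchImpl = globalThis.fetch, // swap in a fake fetch for testing
} = {}) {
  const response = await fetchImpl(`${baseUrl}/comment`, {
    method: 'POST',
    // Send JSON so the generated app's JSON body parser fills req.body.comment
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ comment }),
  });
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  return response.json();
}
```

For example, `postComment('You are great').then(console.log)` should log the comment echoed back by this first version of the route.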

TensorFlow.js With Node.js

Here comes the fun part. Welcome to the AI world, my friend! TensorFlow offers many free, open-source pre-trained models that you can use in your JavaScript applications and treat like any other JavaScript library, as you will see here.

Let’s install the Toxicity Classifier model with TensorFlow.js in Node.js:

```
npm install @tensorflow/tfjs @tensorflow-models/toxicity
```

or

```
yarn add @tensorflow/tfjs @tensorflow-models/toxicity
```

We are ready to write our code in routes\index.js. The toxicity model classifies text with these labels:

```
['identity_attack', 'insult', 'obscene', 'severe_toxicity', 'sexual_explicit', 'threat', 'toxicity']
```

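The model checks whether the text contains threatening language, insults, obscenities, identity-based hate, or sexually explicit language. For each label it returns a pair of probabilities (the first for the label not applying, the second for it applying) and a "match" value: true when the label applies with a probability above the threshold, false when it confidently does not apply, and null when neither probability exceeds the threshold.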
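The relationship between the probabilities and `match` can be sketched as a small function. This is a minimal sketch assuming the probabilities are ordered [not applying, applying] and that the threshold comparison is strict; `matchFor` is a hypothetical helper, not part of the toxicity package. Fed the probability values from the example response, it reproduces their `match` values.

```javascript
// Sketch of how a label's `match` value relates to its two probabilities.
// Assumption: probabilities = [P(label does not apply), P(label applies)].
function matchFor([pFalse, pTrue], threshold) {
  // Neither side is confident enough: the label is undecided.
  if (Math.max(pFalse, pTrue) <= threshold) return null;
  // Otherwise the more probable side decides the match.
  return pTrue > pFalse;
}

console.log(matchFor([0.9659664034843445, 0.04403361141681671], 0.9)); // false
console.log(matchFor([0.08124706149101257, 0.9587529683113098], 0.9)); // true
console.log(matchFor([0.4, 0.6], 0.9)); // null
```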

```
{
  "label": "insult",
  "results": [{
    "probabilities": [0.9659664034843445, 0.04403361141681671],
    "match": false
  }]
},
{
  "label": "toxicity",
  "results": [{
    "probabilities": [0.08124706149101257, 0.9587529683113098],
    "match": true
  }]
},
...
```

This is an example of the response we get from the model; the full output has one entry for each of the seven labels. Let’s apply the AI model in our comment route function.

```javascript
var express = require('express');
var router = express.Router();

// Registers a TensorFlow.js backend (the CPU backend when running in Node.js)
require('@tensorflow/tfjs');
const toxicity = require('@tensorflow-models/toxicity');

/* GET home page. */
router.get('/', function(req, res, next) {
  res.render('index', { title: 'Express' });
});

/* handling the comments */
router.post('/comment', function(req, res, next) {
  // The minimum prediction confidence.
  const threshold = 0.9;

  // Load the model, then classify the comment.
  toxicity.load(threshold)
    .then(model => {
      const sentences = req.body.comment;
      return model.classify(sentences);
    })
    .then(predictions => {
      // prediction results
      console.log(predictions);

      // keep only the labels whose prediction matched
      const predictionArray = predictions.filter(el => el.results[0].match === true);

      // return a message about the predictions
      const message = predictionArray.length > 0
        ? 'We have found this comment offensive'
        : 'Good';

      res.status(201).json({ message: message, result: predictionArray });
    })
    // pass loading or classification errors to the Express error handler
    .catch(next);
});

module.exports = router;
```

Let’s explain

We set the minimum prediction confidence to a 90% threshold when loading the model with toxicity.load(threshold).
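The route as written calls toxicity.load on every request, which typically means fetching and initializing the model each time. One common alternative is to load it once and reuse the same promise. Here is a minimal sketch, assuming a CommonJS Node.js module; `createModelLoader` is a hypothetical helper, and the commented usage assumes the `toxicity` require from the route above.

```javascript
// Sketch: load the model once and share it across requests.
// `load` is any function returning a Promise, e.g. () => toxicity.load(0.9).
function createModelLoader(load) {
  let modelPromise = null;
  return function getModel() {
    if (!modelPromise) {
      modelPromise = load().catch((err) => {
        modelPromise = null; // let the next request retry if loading failed
        throw err;
      });
    }
    // Concurrent and later callers all share the same pending or resolved promise.
    return modelPromise;
  };
}

// Hypothetical usage in routes\index.js:
// const getModel = createModelLoader(() => toxicity.load(0.9));
// router.post('/comment', (req, res, next) => {
//   getModel()
//     .then((model) => model.classify(req.body.comment))
//     .then((predictions) => res.status(201).json(predictions))
//     .catch(next);
// });
```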

Then we take the comment from the request body (req.body.comment) and feed it to the model. We log the result to the console to inspect the full predictions.

After that, we filter the predictions array so that predictionArray holds only the matched predictions, which we send back to the frontend.

Finally, we make the outcome clear by sending a short message that summarizes the comment’s status.
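The filtering and messaging steps can be pulled out of the route into a pure function, which makes them easy to test without loading the model. A sketch, where `buildResponse` is a hypothetical name and the sample input copies the two entries from the example response earlier in this article.

```javascript
// Sketch: the route's filter-and-message logic as a pure function.
// Input has the shape of the array returned by model.classify() for one comment.
function buildResponse(predictions) {
  // Keep only labels whose (single) result matched.
  const matched = predictions.filter((p) => p.results[0].match === true);
  const message = matched.length > 0
    ? 'We have found this comment offensive'
    : 'Good';
  return { message, result: matched };
}

const sample = [
  { label: 'insult', results: [{ probabilities: [0.9659664034843445, 0.04403361141681671], match: false }] },
  { label: 'toxicity', results: [{ probabilities: [0.08124706149101257, 0.9587529683113098], match: true }] },
];
console.log(buildResponse(sample).message); // We have found this comment offensive
console.log(buildResponse(sample).result.map((p) => p.label)); // [ 'toxicity' ]
```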

Conclusion

It’s simple to add AI models to your application, and you can find the full documentation for this model on GitHub. In this example, we send comments to the backend to be classified and then act on the result, as we did by sending back a short message. Based on that result, you can decide whether to remove the comment, save it in the database, or block the user, all without needing a human review.

I hope this was helpful. Thanks!